Glad to have things back up, though I’m still looking into the root cause; I wouldn’t want to have to do this again.

Category Archives: Kubernetes

Kubernetes utilities

So many utilities out there to explore:

https://collabnix.github.io/kubetools/

Love how every k8s-at-home helm chart can route an application’s traffic through a VPN sidecar with just a few lines of yaml. And the sidecar can either hold its own VPN connection or go through a single gateway pod that holds the VPN connection. So cool!

The Bitnami team also keeps a great standard across their helm charts, for example consistent ways to specify a local repo and ingresses. Many are still works in progress.

Took a look at Tor solutions just for fun: there are several Tor proxies for kubernetes, as well as solutions ready to set up a server reachable via an onion address using a Tor kubernetes controller. Think I’ll give it a try.

This kube rabbit hole is so much fun!

xcp-ng & kubernetes

Decided to test out xcp-ng as my underlying infrastructure for setting up kubernetes clusters.

At first glance xcp-ng, the open source platform based on Citrix’s XenServer, looks very similar to VMware, yet the similarities disappear quickly. VMware implemented the CSI (Container Storage Interface) and CPI (Cloud Provider Interface) used by kubernetes integrations early on, and those implementations keep maturing, whereas xcp-ng is still looking for volunteers to begin its implementations. Why start from scratch when VMware already has a mature solution?

What about a utility to spin up a cluster? Google, AWS, and VMware all have a CLI to spin up and work with clusters; xcp-ng, not so much. You would need a third-party tool such as Terraform.

xcp-ng is great on a budget but lacks the APIs needed for a fully integrated kubernetes solution. Clusters and their storage must be built and maintained manually. At this point I wonder how anyone could choose a Citrix XenServer solution knowing everything is headed towards kubernetes. But as a free solution for two or three manually managed clusters, yes.

Helm chart checklist – workflow from dev to prod (always evolving)

Helm chart setup workflow

Dev

- Initially get things working with a default helm install into dev.

- Does it have LDAP or OIDC integration, or some other reason to verify an on-prem CA chain? If yes, figure out how to get the CA chain installed.

- Set up OIDC if possible; set up LDAP only if needed and OIDC is not available.

- Is it possible to set values in the helm chart to install the CA chain as part of the helm install? If yes, modify the values yaml. If no, fork the helm chart and add the steps, then see whether the project owner is OK with a pull request (better to integrate the change into the upstream helm chart than to maintain a fork).

- Configure something on the server that you want to persist.

- Helm uninstall and reinstall. Did you lose the setting? If so, figure out the steps to get the data to persist.

- Try increasing the storage size of any PVCs that might need to grow in production. Is this possible without taking down the application? Figure out what this use case requires, since it will inevitably come up. Document it and be ready. It may be wise to implement a pipeline for this purpose.

- Cordon and drain the involved node(s), then uncordon them. Was data lost when things came back up? Figure out how to ensure data persists.

- Does the server have a method to export its configuration or otherwise back itself up? Configure automated backups.

- Is it possible to configure the server as HA? Can HA be configured later, or must it be set up from the start? Can a single instance be migrated to HA? Decide whether HA is needed or a single instance is good enough. If HA is desired, figure out how to set it up and go through this list again.

- Are there options to configure metrics for the application? Often these exist in helm charts. (lower priority when initially working to get something up)

- If there is an option to use a log aggregator, set that up, or possibly set up a logging sidecar. (lower priority when initially working to get something up)

- Server is now ready to release into test.
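The PVC resize item is the one most worth rehearsing before production. Below is a minimal Python sketch, assuming the volume’s StorageClass sets `allowVolumeExpansion: true` (Kubernetes refuses the resize otherwise) and that sizes are written as whole numbers with the usual suffixes such as `Gi` or `M`. The helper names `parse_storage` and `pvc_resize_patch` are hypothetical; the script only builds the patch body and never talks to the cluster.

```python
import json
import re

# Multipliers for the quantity suffixes commonly used for storage:
# decimal suffixes are powers of 1000, binary "i" suffixes powers of 1024.
_MULTIPLIERS = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}


def parse_storage(quantity: str) -> int:
    """Convert a whole-number quantity such as '10Gi' or '500M' into bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * _MULTIPLIERS[suffix or ""]


def pvc_resize_patch(current: str, requested: str) -> str:
    """Build a JSON merge patch that grows a PVC's storage request.

    A PVC can only grow, never shrink, so anything that is not
    strictly larger is rejected before it ever reaches the cluster.
    """
    if parse_storage(requested) <= parse_storage(current):
        raise ValueError(f"{requested} is not larger than {current}")
    patch = {"spec": {"resources": {"requests": {"storage": requested}}}}
    return json.dumps(patch)


if __name__ == "__main__":
    print(pvc_resize_patch("10Gi", "20Gi"))
```

The output could then be fed to something like `kubectl patch pvc <pvc-name> --type merge -p '<output>'`, where `<pvc-name>` stands for your claim. Whether the application keeps running during the resize depends on whether the storage driver supports online expansion; if it does not, the pod has to be restarted before the filesystem grows, which is exactly the behavior worth documenting in dev.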

Test

- Configure permissions for accounts accessed via OIDC / LDAP. Note that a program which supports LDAP but not OIDC is less mature. Check for a plugin/extension if OIDC does not appear to be available. OIDC enables SSO with almost any identity provider and is always preferred.

- Do the CPU and memory requests match what is actually needed?

- Someone needs to perform some manual testing or work on automated testing.

- If no one ever tries restoring from a backup, there is a good chance the process won’t work when it is needed. Try it out before there is a fire.

- No system may be released into production without an automated method of registering its IP in DNS (e.g. external-dns) and an automated method of renewing its SSL certificates (e.g. cert-manager). Verify that both work.

- Be sure to test upgrading to the next release of the helm chart as well as rolling back, and confirm all tests still pass.

- If all testing passes, it is ready for production.
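For the DNS and certificate rule, one common way to wire this up is through annotations on the application’s Ingress. The minimal sketch below builds such an Ingress as a Python dict and runs a static pre-check over it. It assumes external-dns is watching Ingress objects and that cert-manager has a ClusterIssuer, here called `onprem-ca-issuer`; that issuer name, the host `taiga.home.example`, and the helper names are hypothetical. Passing this check only means the manifest asks for both automations; verifying they work still means seeing the DNS record and the issued certificate actually show up.

```python
import json

# Hypothetical names: substitute your own ClusterIssuer, hostname and service.
CLUSTER_ISSUER = "onprem-ca-issuer"
ISSUER_KEYS = {"cert-manager.io/issuer", "cert-manager.io/cluster-issuer"}


def build_ingress(name, host, service, port, issuer=CLUSTER_ISSUER):
    """Ingress wired for external-dns (DNS record) and cert-manager (TLS cert)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # external-dns also reads hosts from spec.rules; this makes it explicit.
                "external-dns.alpha.kubernetes.io/hostname": host,
                # cert-manager issues and renews the certificate into <name>-tls.
                "cert-manager.io/cluster-issuer": issuer,
            },
        },
        "spec": {
            "tls": [{"hosts": [host], "secretName": f"{name}-tls"}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


def release_blockers(ingress):
    """Static pre-check: reasons this Ingress is not set up for DNS and cert automation."""
    problems = []
    annotations = ingress.get("metadata", {}).get("annotations", {})
    if ISSUER_KEYS.isdisjoint(annotations):
        problems.append("no cert-manager issuer annotation")
    spec = ingress.get("spec", {})
    tls_hosts = {h for entry in spec.get("tls", []) for h in entry.get("hosts", [])}
    for rule in spec.get("rules", []):
        host = rule.get("host")
        if host is None:
            problems.append("rule without a host, so no DNS name to register")
        elif host not in tls_hosts:
            problems.append(f"host {host} has no TLS entry")
    return problems


if __name__ == "__main__":
    print(json.dumps(build_ingress("taiga", "taiga.home.example", "taiga-front", 80), indent=2))
```

Running the script prints JSON, which `kubectl apply -f -` accepts as readily as yaml.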

Production

- An update strategy needs to be established just prior to release into production, and then followed. Schedule: monthly, quarterly, every 6 months, or upon release of a new version. Version: always run the latest, or the newest release of the major version just prior to the latest major release (with all its updates). Some programs, such as WordPress, can and will update plugins automatically. Is this OK?

- Generally, automation is desired for rolling something out into production. When an update is ready, automation should apply it first in dev and run automated testing, then roll it out into prod with someone’s approval (or roll it out into prod automatically if all tests pass and that is decided to be good enough).

- Also, a pipeline for rolling back to a previous version is a good idea, in case a deployment to production fails.
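The "version just prior to the latest major release" policy is easy to get wrong when versions are compared as strings ("2.10.0" sorts before "2.9.0"). A minimal Python sketch of picking a version under either policy, assuming the candidates are plain semantic versions (an optional leading `v`, pre-releases marked with `-`, build metadata after `+`), for example as listed by `helm search repo <chart> --versions`; the policy names `latest` and `previous-major` are hypothetical.

```python
def is_stable(version: str) -> bool:
    """Pre-releases such as '3.0.0-rc.1' carry a '-' before any '+build' suffix."""
    return "-" not in version.split("+", 1)[0]


def parse_version(version: str) -> tuple[int, int, int]:
    """'v2.10.0' -> (2, 10, 0); build metadata after '+' is ignored."""
    core = version.lstrip("v").split("+", 1)[0]
    major, minor, patch = (int(part) for part in core.split("."))
    return major, minor, patch


def pick_version(available: list[str], policy: str = "latest") -> str:
    """Choose a stable version: the newest overall, or the newest of the previous major line."""
    stable = [v for v in available if is_stable(v)]
    if not stable:
        raise ValueError("no stable versions to choose from")
    newest = max(stable, key=parse_version)
    if policy == "latest":
        return newest
    if policy == "previous-major":
        previous = parse_version(newest)[0] - 1
        line = [v for v in stable if parse_version(v)[0] == previous]
        if not line:
            raise ValueError(f"no stable {previous}.x release available")
        return max(line, key=parse_version)
    raise ValueError(f"unknown policy: {policy!r}")
```

Comparing tuples of integers rather than strings is what keeps 2.10.0 ahead of 2.3.1, and filtering pre-releases first keeps a release candidate from ever being chosen as "latest".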

(pull request) Contributing to open source, helm chart for taiga, ability to import an on prem certificate authority certificate chain

OIDC is always preferred if possible. At this point not every project supports OIDC, though some can be extended via an extension or plugin to accomplish the goal. I’ve got enough experience to help projects get over this hurdle and get OIDC working. If I could be paid just to help out open source projects, I might go for it.

Here’s a pull request for a taiga helm chart I’ve been using. I’ve used taiga for years via docker and am happy to be able to help out in this way now that I’m using kubernetes and helm charts. In this case I borrowed a technique from a nextcloud helm chart, and it works perfectly for this taiga helm chart: https://github.com/nemonik/taiga-helm/pull/6

Like rebuilding a Shinto shrine

Traditionally, some Shinto shrines are rebuilt exactly the same, next to the old shrine, every so many years. The old shrine is then removed, and when the time comes the shrine is rebuilt again.

Something similar can apply to home environments. Recently I nuked everything and rebuilt from the ground up, something I’ve always done every 6 months to a year, for security reasons and to ensure I’m always getting the best performance from my infrastructure.

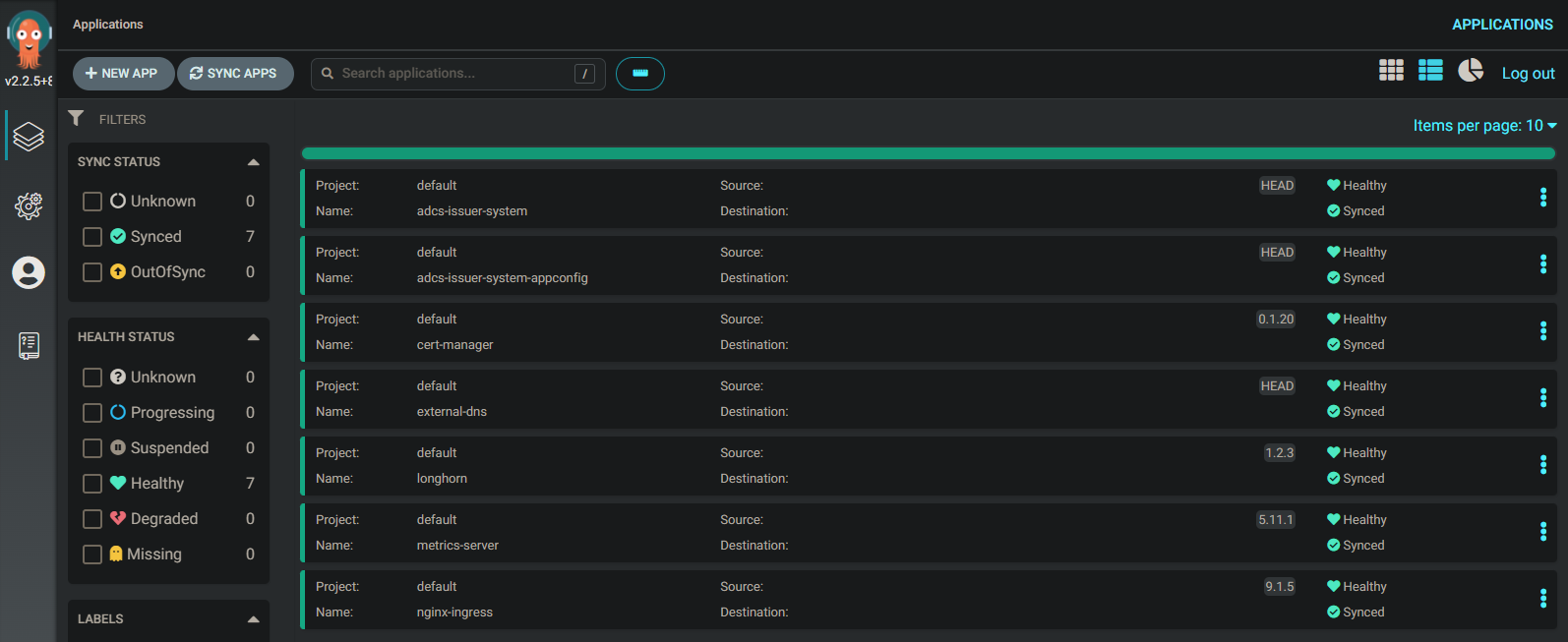

Such reinstalling is a natural fit for kubernetes. There are several methods for spinning up a cluster, and after that, because everything in kubernetes is defined in yaml files, it is easy to spin up the services you had running before and watch them register themselves in the new DNS and generate their own certificates from the new Active Directory certificate authority. Amazing. Kubernetes is truly a work of art.

What is Kubernetes really?

As I take the deep dive into kubernetes, what I’m finding is that, though it is definitely a container management system, it can also be seen as a yaml processing engine driven by controllers. Let me explain.

Kubernetes understands what a deployment is and what a service is; these are defined as yaml and loaded. Each of these object types is handled by a controller that understands objects of that type defined in yaml and works to make the cluster match what they declare.

What is interesting about this is that we can define our own object types (custom resources) and implement our own controllers for them. For example, I could implement a controller that understands how to manage a tic-tac-toe game. That controller could also implement an AI that knows how to play the game. In the same way you can edit a deployment, you could edit the game, and the kubernetes infrastructure could respond to the change. Or, a move could be another type recognized by the game controller, so you could create a move associated with a game in the same way you can create a service associated with a deployment.
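To make the controller idea concrete, here is a minimal plain-Python sketch of the reconcile step such a tic-tac-toe controller might run. It deliberately leaves out the kubernetes client: a real controller would watch Game objects through the API server (for example with a framework such as kopf or kubebuilder) and write the result back to the object’s status. The Game shape used here (`spec.moves` holding the human’s cells 0 to 8, and a `status` with `phase`, `result`, `board`, `aiMoves`) is hypothetical, and the AI is a simple win/block/centre heuristic rather than a perfect player.

```python
# The eight winning lines on a 3x3 board, cells numbered 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def outcome(board):
    """Return 'X' or 'O' for a winner, 'draw' for a full board, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return "draw" if " " not in board else None


def ai_move(board):
    """Deterministic AI: win if possible, else block X, else centre/corners/edges."""
    free = [i for i, cell in enumerate(board) if cell == " "]
    for mark in ("O", "X"):  # first look for an O win, then for an X win to block
        for i in free:
            trial = board.copy()
            trial[i] = mark
            if outcome(trial) == mark:
                return i
    return next(i for i in (4, 0, 2, 6, 8, 1, 3, 5, 7) if board[i] == " ")


def reconcile(game):
    """Derive status from spec; rebuilding from scratch each time keeps it idempotent."""
    board, ai_moves, result = [" "] * 9, [], None
    for move in game["spec"]["moves"]:
        # Reject moves after the game ended, off-board cells, or occupied cells.
        if result is not None or not 0 <= move <= 8 or board[move] != " ":
            game["status"] = {"phase": "Invalid", "reason": f"illegal move {move}"}
            return game
        board[move] = "X"
        result = outcome(board)
        if result is None:  # game still open, so the controller answers as O
            reply = ai_move(board)
            board[reply] = "O"
            ai_moves.append(reply)
            result = outcome(board)
    game["status"] = {
        "phase": "Finished" if result else "InProgress",
        "result": result,
        "board": "".join(board),
        "aiMoves": ai_moves,
    }
    return game
```

Because `reconcile` rebuilds the status from the spec every time instead of applying deltas, running it twice on the same object gives the same answer, which is the property controllers rely on when an event is delivered more than once or a periodic resync happens. Editing the game then just means appending a cell to `spec.moves` and letting the next reconcile catch up.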

You can imagine running ‘k get games’ and seeing the games being played listed out, as well as ‘k describe game a123’ to get the details and status of a game.
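For `k get games` (with `k` aliased to `kubectl`) and `k describe game a123` to work, the Game type first has to be registered with the API server through a CustomResourceDefinition. A minimal sketch that emits one from Python as JSON, which `kubectl apply -f` accepts just like yaml. The API group `tictactoe.example.com`, the `v1alpha1` version, the `ttt` short name, and the printer columns are hypothetical choices; the columns are what `get` would show next to each game once a controller fills in the status.

```python
import json

GROUP = "tictactoe.example.com"  # hypothetical API group
VERSION = "v1alpha1"


def game_crd():
    """CustomResourceDefinition registering a namespaced 'Game' kind."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # The CRD name must be <plural>.<group>.
        "metadata": {"name": f"games.{GROUP}"},
        "spec": {
            "group": GROUP,
            "scope": "Namespaced",
            "names": {"plural": "games", "singular": "game",
                      "kind": "Game", "shortNames": ["ttt"]},
            "versions": [{
                "name": VERSION,
                "served": True,
                "storage": True,
                # Lets a controller update status without touching spec.
                "subresources": {"status": {}},
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {
                        "spec": {"type": "object", "properties": {
                            "moves": {"type": "array", "items": {
                                "type": "integer", "minimum": 0, "maximum": 8}},
                        }},
                        "status": {"type": "object",
                                   "x-kubernetes-preserve-unknown-fields": True},
                    },
                }},
                # Extra columns shown by `kubectl get games`.
                "additionalPrinterColumns": [
                    {"name": "Phase", "type": "string", "jsonPath": ".status.phase"},
                    {"name": "Result", "type": "string", "jsonPath": ".status.result"},
                    {"name": "Age", "type": "date", "jsonPath": ".metadata.creationTimestamp"},
                ],
            }],
        },
    }


def game(name, moves):
    """A Game object to apply once the CRD is established."""
    return {"apiVersion": f"{GROUP}/{VERSION}", "kind": "Game",
            "metadata": {"name": name}, "spec": {"moves": moves}}


if __name__ == "__main__":
    print(json.dumps(game_crd(), indent=2))
```

Something like `python game_crd.py | kubectl apply -f -` (script name hypothetical) would register the type; after that, Game objects built with `game()` can be applied, listed, and described like any built-in resource.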

Seems I’m not the only one who has started thinking along these lines. A quick Google search turns up Agones, an open source project for hosting and scaling dedicated game servers on kubernetes.

This is fascinating and gives me a lot of ideas on how I might reimplement my list processing server & generic game server, within the kubernetes framework.